Blender

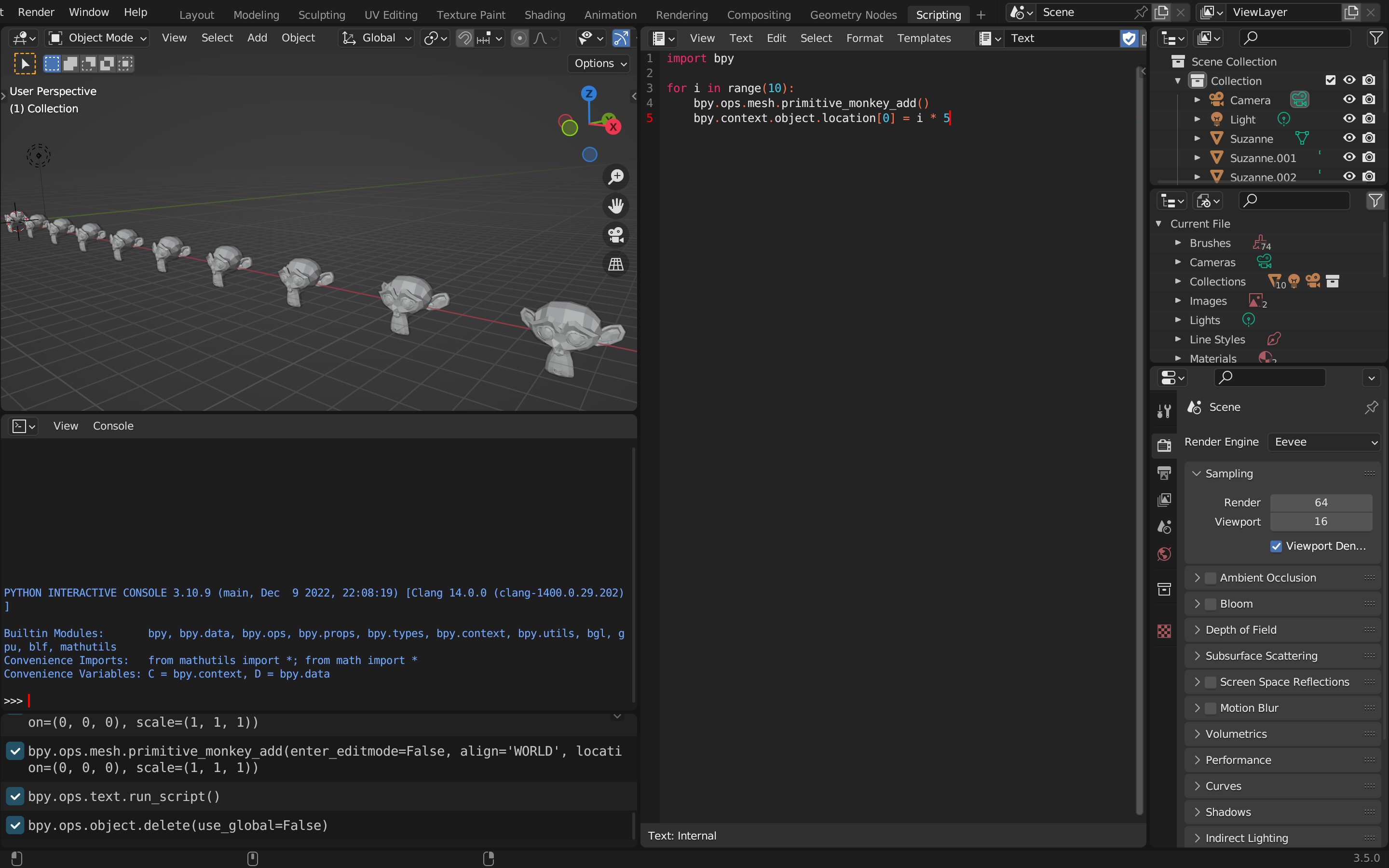

This seminar, Blender as Interface, focused on Blender's Python interface and how it can connect Blender with the external world. It opened my eyes to the vast possibilities Blender offers beyond 3D modeling and animation. In this reflection, I summarize what I learned and explain my key takeaways.

Blender + Python

Blender Controlled through Mobile Phone Sensors

Blender + Python + Arduino

LED Strip

Reflection

First, I learned that Blender, an open-source program, has a robust Python API that lets developers create custom tools and add-ons that extend its functionality. The API gives access to almost every part of Blender, including objects, meshes, lights, cameras, and animation data. This lets users automate repetitive tasks, streamline workflows, and build custom solutions that fit their specific needs.
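As a small illustration of automating a repetitive task, the sketch below places a ring of cubes instead of adding and positioning each one by hand. The geometry helper `ring_positions` is plain, hypothetical Python, not part of Blender. Only `build_ring` touches the API, through `bpy.ops.mesh.primitive_cube_add`, so it must run inside Blender. The function names, defaults, and the `RingCube_00` naming scheme are illustrative choices.

```python
import math


def ring_positions(count, radius, z=0.0):
    """Return `count` (x, y, z) points evenly spaced on a circle of `radius`."""
    if count < 1:
        raise ValueError("count must be at least 1")
    step = 2 * math.pi / count
    return [
        (radius * math.cos(i * step), radius * math.sin(i * step), z)
        for i in range(count)
    ]


def build_ring(count=12, radius=5.0, cube_size=0.5):
    """Add one cube per ring position. Runs only where Blender's bpy module exists."""
    import bpy  # provided by Blender's bundled Python

    for i, location in enumerate(ring_positions(count, radius)):
        bpy.ops.mesh.primitive_cube_add(size=cube_size, location=location)
        # The operator makes the new cube the active object, so rename it.
        bpy.context.active_object.name = f"RingCube_{i:02d}"
```

To try it, paste the block into Blender's Text Editor, append a call such as `build_ring(count=12, radius=5.0)`, and run the script. Keeping the math in a separate function means it can be checked outside Blender.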

Second, the seminar highlighted how Blender can serve as a real-time interface for networked sensors. Sensors such as cameras, microphones, or a mobile phone's motion sensors can stream data into Blender, and that input can drive the scene as it arrives. For instance, a camera feed could power a virtual reality experience, or a sound input could control the movement of a virtual object. The range of uses is wide, and it is fascinating to see Blender applied beyond its traditional scope.
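A minimal sketch of the phone-sensor idea is shown below, under several assumptions. It assumes a phone app sends UDP datagrams containing plain-text accelerometer readings `ax,ay,az` in m/s² to port 5005. Both the message format and the port are hypothetical, as are all function names. The tilt is estimated from the gravity vector with `atan2`. Inside Blender, a `bpy.app.timers` callback polls a non-blocking socket, because a blocking `while True` loop in a script would freeze Blender's interface.

```python
import math
import socket


def parse_accel_packet(data):
    """Parse a datagram such as b"0.12,-0.40,9.78" into (ax, ay, az).

    ASSUMPTION: the phone app sends plain-text "ax,ay,az" in m/s^2.
    """
    parts = data.decode("ascii").strip().split(",")
    if len(parts) != 3:
        raise ValueError(f"expected 3 values, got {len(parts)}")
    ax, ay, az = (float(p) for p in parts)
    return ax, ay, az


def tilt_from_accel(ax, ay, az):
    """Estimate (pitch, roll) in radians from the measured gravity vector."""
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return pitch, roll


def open_listener(port=5005):
    """Bind a non-blocking UDP socket so polling never blocks Blender's UI."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind(("0.0.0.0", port))
    sock.setblocking(False)
    return sock


def latest_tilt(sock):
    """Drain all queued datagrams and return the tilt of the newest one, or None."""
    newest = None
    while True:
        try:
            newest, _addr = sock.recvfrom(1024)
        except BlockingIOError:  # queue is empty
            break
    if newest is None:
        return None
    return tilt_from_accel(*parse_accel_packet(newest))


def start_in_blender(object_name="Cube", port=5005, interval=0.02):
    """Copy phone tilt onto an object's rotation; runs only inside Blender."""
    import bpy  # provided by Blender's bundled Python

    sock = open_listener(port)

    def poll():
        try:
            tilt = latest_tilt(sock)
        except ValueError:  # malformed packet: skip this round
            tilt = None
        if tilt is not None:
            pitch, roll = tilt
            obj = bpy.data.objects[object_name]
            # ASSUMPTION: roll -> X axis, pitch -> Y axis; swap if your phone differs.
            obj.rotation_euler = (roll, pitch, obj.rotation_euler[2])
        return interval  # Blender calls poll() again after this many seconds

    bpy.app.timers.register(poll)
```

Draining the queue and keeping only the newest packet prevents lag from building up when the phone sends faster than the timer fires.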

The seminar also showed how Blender can control external devices such as LEDs connected to an Arduino. A Python script running in Blender sends data to the Arduino, which sets the state of the LEDs. This lets users build lighting setups that respond to events or actions in the scene, create interactive installations, or control the lighting of a physical space from Blender. Blender can also be used to plan a robot's path. By importing CAD files of the robot and its environment, users can simulate the robot's movement and plan its path with Blender's animation tools. This lets them test and optimize the movement before deploying the robot in the real world.
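This reflection does not describe the data format between Blender and the Arduino, so the sketch below assumes a hypothetical one. Each frame has a two-byte header `0xAA 0x55`, an LED count, one RGB byte triple per LED, and a one-byte checksum. A matching Arduino sketch that parses these frames and drives the strip would also be needed; it is not shown here. `to_byte`, `encode_frame`, and `send_frame` are plain Python. `open_arduino` relies on the third-party pyserial package.

```python
import time

HEADER = b"\xAA\x55"  # HYPOTHETICAL start marker; the Arduino sketch must match it


def to_byte(value):
    """Map a 0.0-1.0 float (like a Blender color channel) to an int 0-255, clamped."""
    return max(0, min(255, round(value * 255)))


def encode_frame(colors):
    """Pack (r, g, b) floats as HEADER + count + RGB bytes + 1-byte checksum."""
    if len(colors) > 255:
        raise ValueError("this frame format supports at most 255 LEDs")
    payload = bytearray([len(colors)])
    for r, g, b in colors:
        payload += bytes((to_byte(r), to_byte(g), to_byte(b)))
    checksum = sum(payload) & 0xFF  # low byte of the sum of count + RGB bytes
    return HEADER + bytes(payload) + bytes([checksum])


def open_arduino(port, baudrate=115200):
    """Open the USB serial link once; requires the third-party pyserial package."""
    import serial  # pip install pyserial

    link = serial.Serial(port, baudrate, timeout=1)
    time.sleep(2)  # many Arduino boards reset when the port opens; let it boot
    return link


def send_frame(link, colors):
    """Write one frame to an already-open serial link (anything with .write())."""
    link.write(encode_frame(colors))
```

Inside Blender, the colors could come from the scene. For example, `send_frame(link, [tuple(bpy.data.lights["Light"].color)] * 30)` would mirror a light named "Light" on a 30-LED strip; the light name, LED count, and port name (such as `/dev/ttyACM0` or `COM3`) are illustrative. The port is opened once and reused because reopening it can reset the board.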

In conclusion, the seminar on Blender as Interface was an eye-opening experience that showed me possibilities of Blender far beyond 3D modeling and animation. I learned new ways to use digital software for physical interaction. These features showcase Blender's versatility and its potential as a powerful tool for developers, engineers, and artists alike.